WMR testing during development

Unity provides tools that let you test applications during development. With these tools, you can debug your application as you would any other Unity application, and push content wirelessly to your device. Some of these tools only work with specific WMR devices, such as HoloLens.

Play in Editor (immersive devices)

When using an immersive Windows Mixed Reality device, you can test and iterate on your application directly in the Unity Editor. Make sure the Windows Mixed Reality Portal is open and the headset is active, then press the Play button in the Editor. Your application now runs within the Editor while also rendering to the headset.

Known limitations

There are a couple of limitations to consider when using Play in the Editor with Mixed Reality devices:

Keyboard, mouse, and spatial controllers work in the Editor, as long as they are recognized by Windows and Unity.

Input devices that are not Windows Mixed Reality devices, such as the keyboard and mouse, only work correctly when the Game view has focus.

Holographic Emulation (HoloLens only)

Holographic Emulation allows you to prototype, debug, and run Microsoft HoloLens projects directly in the Unity Editor rather than building and running your application every time you wish to see the effect of an applied change. This vastly reduces the time between iterations when developing HoloLens applications in Unity.

Holographic Emulation has three different modes:

Remote to Device: Your application connects to a Windows Holographic device and behaves as if it were deployed to that device, while in reality it is running in the Unity Editor on your host machine. For more information, see the section Emulation Mode: Remote to Device, below.

Simulate in Editor: Your application runs on a simulated HoloLens device directly in the Unity Editor, with no connection to a real-world Windows Holographic device. For more information, see the section Emulation Mode: Simulate in Editor, below.

None: This setting still lets you run your application in the Editor if you have a Windows Holographic device.

Holographic emulation is supported on any machine running Windows 10 with the Fall Creators Update. Both Remote to Device and Simulate in Editor aim to mimic the current HoloLens runtime as closely as possible, so APIs newer than that runtime are not present. As a result, recent additions to the input APIs don't work in these modes, just as they wouldn't on a HoloLens. For example, rotation, position accuracy, angular velocity, and a pose's forward, up, and right basis vectors are all unavailable, so the APIs that query them fail.

To enable remoting or simulation, open the Unity Editor and go to Window > XR > Holographic Emulation.

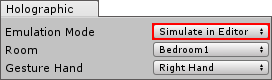

This opens the Holographic control window, which contains the Emulation Mode drop-down menu. Keep this window visible during development so that you can access its settings when you launch your application.

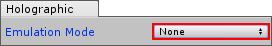

Emulation Mode is set to None by default, which means that if you have an immersive headset connection, your application runs in the Editor and plays on the HMD. See the Play in Editor section above for more details.

Change the Emulation Mode to Remote to Device or Simulate in Editor to enable emulation for HoloLens development. This overrides Play in Editor support for any connected immersive device.
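The precedence rules above can be summarized as a small decision function. This is a minimal sketch that only restates the behavior described on this page; `EmulationMode` and `play_target` are hypothetical names for illustration and are not part of Unity's API.

```python
from enum import Enum


class EmulationMode(Enum):
    """The three options in the Emulation Mode drop-down (hypothetical model)."""
    NONE = "None"
    REMOTE_TO_DEVICE = "Remote to Device"
    SIMULATE_IN_EDITOR = "Simulate in Editor"


def play_target(mode: EmulationMode, immersive_headset_active: bool) -> str:
    """Describe where pressing Play renders, per the rules on this page."""
    if mode is EmulationMode.REMOTE_TO_DEVICE:
        # Emulation overrides Play in Editor, even if an immersive headset is active.
        return "remote HoloLens"
    if mode is EmulationMode.SIMULATE_IN_EDITOR:
        # Also overrides Play in Editor; no real device is involved.
        return "simulated HoloLens in the Editor"
    # None (the default): Play in Editor also renders to an active immersive headset.
    if immersive_headset_active:
        return "Editor and immersive headset"
    return "Editor only"


if __name__ == "__main__":
    for mode in EmulationMode:
        print(f"{mode.value}: {play_target(mode, immersive_headset_active=True)}")
```

The key point the sketch captures is that either emulation mode wins over an attached immersive headset; only the default None setting falls through to Play in Editor.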

Emulation Mode: Remote to Device

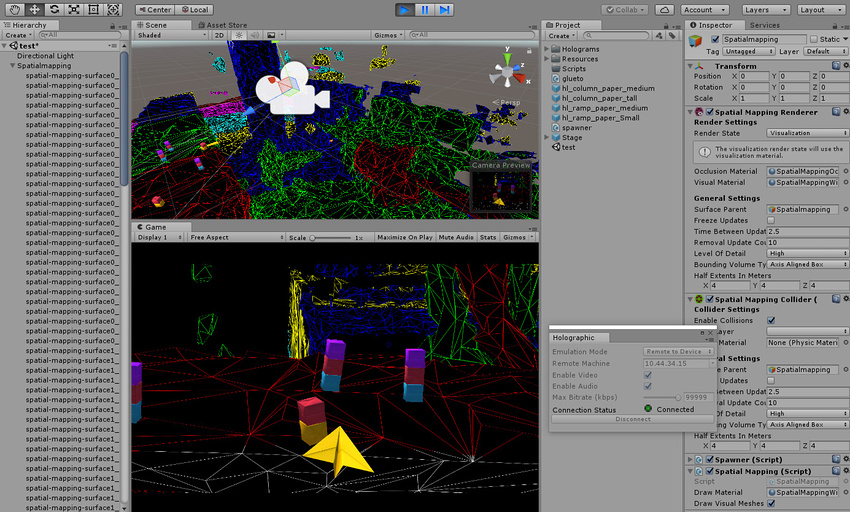

Holographic Remoting allows your application to behave as if it were deployed to a HoloLens device, while actually running in the Unity Editor on the host machine. Even while remoting, spatial sensor data and head tracking from the connected device are still active and work correctly. The Unity Editor Game view shows what is being rendered on the device, but not what the wearer of the device sees of the real world. (See an example in the image above titled ‘The Unity Editor running with Holographic Emulation’.)

Note: Holographic Remoting is a quick way to iterate over changes during development, but you should avoid using it to validate performance. Your application is running on the host machine rather than the device itself, so you might get inaccurate results.

To enable this mode in the Unity Editor, set the Emulation Mode to Remote to Device. The Holographic window then changes to reflect the additional settings available with this mode.

Connecting your device

To remote to a HoloLens device, you need to install the Holographic Remoting Player on your HoloLens device.

To install the Holographic Remoting Player application and configure the Unity Editor:

-

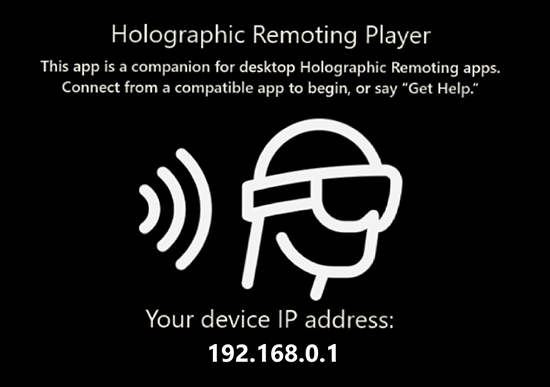

Install and run the Holographic Remoting Player from your HoloLens store. This is available from the Windows Store app on your Hololens. When launched, the remoting player enters a waiting state and displays the IP address of the device on the HoloLens screen:

The Holographic Remoting Player screen For additional information about this Player, including how to enable connection diagnostics, see the Microsoft Windows Dev Center.

-

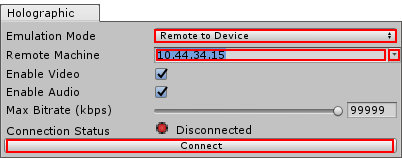

Enter the IP address of your HoloLens device into the Remote Machine field from the Holographic window in the Unity Editor. The drop-down button to the right of the field allows you to select recently used addresses:

Configuring Remote to Device emulation mode Click the Connect button. The connection status should change to a green light with a connected message.

Click Play in the Unity Editor to run your device remotely.

When remoting starts, you can pause, inspect GameObjects, and debug in the same way as with any other application running in the Editor. The HoloLens device and your host machine transmit video, audio, and device input back and forth across the network.

Known limitations

There are a couple of limitations you should consider when using Remote to Device emulation with HoloLens:

Unity does not support Speech (PhraseRecognizer) when using Remote to Device emulation mode. In this mode, the emulator intercepts speech on the host machine running the Unity Editor, so the application uses the microphone of the PC you are remoting from instead of the HoloLens microphone.

While Remote to Device is running, all audio on the host machine redirects to the device, including audio from outside your application.

Emulation Mode: Simulate in Editor

When using this emulation mode, your application runs on a simulated Holographic device directly in the Unity Editor, with no connection to a real-world HoloLens device. This is useful when developing for Windows Holographic if you do not have access to a HoloLens device.

Note: You should test your application on a HoloLens device to ensure it works as expected. Don’t rely exclusively on emulation during development.

To enable this mode, set the Emulation Mode to Simulate in Editor and click the Play button. Your application then starts in an emulator built into the Unity Editor.

In the Holographic Emulation control window, use the Room drop-down menu to choose an available virtual room (the same as those supplied with the XDE HoloLens Emulator). Use the Gesture Hand drop-down menu to specify which virtual hand performs gestures (left or right).

In Simulate in Editor mode, you need to use a game controller (such as an Xbox 360 or Xbox One controller) to control the virtual human player. The simulation still works if you do not have a controller, but you cannot move the virtual human player around.

The table below lists the controller inputs and their usage during Simulate in Editor mode.

| Control | Usage |
|---|---|
| Left stick | Up and down move the virtual human player backward and forward. Left and right move the human player left and right. |
| Right stick | Up and down rotate the virtual human player’s head up and down (rotation about the X axis - Pitch). Left and right turn the virtual human player left and right (rotation about the Y axis - Yaw). |
| D-pad | Move the virtual human player up and down or tilt the player’s head left and right (rotation about the Z axis - Roll). |
| Left and right trigger buttons or A button | Perform a tap gesture with a virtual hand. |
| Y button | Reset the pitch (X rotation) and roll (Z rotation) of the virtual human player’s head. |

To use a game controller, the Game view must have focus. If another Unity Editor window has focus, click the Game view to give it focus.
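The stick, D-pad, and Y button mappings in the table amount to a simple first-person camera model. The sketch below is a hypothetical illustration of that mapping, not Unity's simulator code. The class name, the `speed` and `turn_rate` parameters, the sign conventions (stick up moves forward, positive pitch looks up, positive yaw turns right, +Z is forward at yaw 0), the pitch clamp, and the reading that D-pad up/down moves vertically while D-pad left/right rolls the head are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class VirtualPlayer:
    """Hypothetical model of the simulated player (not Unity's implementation)."""
    x: float = 0.0      # metres; + is right at yaw 0
    y: float = 0.0      # metres; + is up
    z: float = 0.0      # metres; + is forward at yaw 0
    pitch: float = 0.0  # degrees about X; + looks up in this sketch
    yaw: float = 0.0    # degrees about Y; + turns right
    roll: float = 0.0   # degrees about Z; + tilts the head right in this sketch

    def left_stick(self, lx: float, ly: float, speed: float, dt: float) -> None:
        """Up/down moves forward/backward; left/right strafes, relative to yaw."""
        yaw_rad = math.radians(self.yaw)
        forward = (math.sin(yaw_rad), math.cos(yaw_rad))  # (x, z) components
        right = (math.cos(yaw_rad), -math.sin(yaw_rad))   # (x, z) components
        step = speed * dt
        self.x += (ly * forward[0] + lx * right[0]) * step
        self.z += (ly * forward[1] + lx * right[1]) * step

    def right_stick(self, rx: float, ry: float, turn_rate: float, dt: float) -> None:
        """Up/down changes pitch (clamped to +/-90); left/right changes yaw."""
        self.pitch = max(-90.0, min(90.0, self.pitch + ry * turn_rate * dt))
        self.yaw = (self.yaw + rx * turn_rate * dt) % 360.0

    def d_pad(self, dx: int, dy: int, speed: float, turn_rate: float, dt: float) -> None:
        """Up/down moves the player vertically; left/right rolls the head."""
        self.y += dy * speed * dt
        self.roll += dx * turn_rate * dt

    def reset_head(self) -> None:
        """Y button: reset pitch and roll, keeping yaw and position."""
        self.pitch = 0.0
        self.roll = 0.0


if __name__ == "__main__":
    player = VirtualPlayer()
    player.right_stick(rx=1.0, ry=0.0, turn_rate=90.0, dt=1.0)  # turn right 90 degrees
    player.left_stick(lx=0.0, ly=1.0, speed=1.0, dt=1.0)        # walk forward 1 m
    print(player)
```

Movement is computed relative to the current yaw only, so looking up or rolling the head never changes the walking direction; that matches the table's split between moving the player and rotating the player's head.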

Known limitations

There are a couple of limitations you should consider when using Simulate in Editor emulation with HoloLens:

Most game controllers work in Simulate in Editor mode, as long as Windows and Unity recognize them. However, unsupported controllers might cause compatibility issues.

You can use PhotoCapture during Simulate in Editor mode, but you must use an externally connected camera (such as a webcam) because there is no connected HoloLens device. This also prevents you from retrieving a matrix with TryGetProjectionMatrix or TryGetCameraToWorldMatrix, because a normal external camera cannot compute where it is in relation to the real world.

Useful resources and troubleshooting

When troubleshooting development issues for Windows Mixed Reality applications, you might find these external resources useful:

2018–03–27 Page published

New content added for XR API changes in 2017.3
