VR overview

Unity VR lets you target virtual reality devices directly from Unity, without any external plug-ins in projects. It provides a base API and feature set with compatibility for multiple devices. It provides forward compatibility for future devices and software.

By using the native VR support in Unity, you gain:

- Stable versions of each VR device

- A single API interface to interact with different VR devices

- A clean project folder with no external plugin for each device

- The ability to include and switch between multiple devices in your applications

- Better performance (Lower-level Unity engine optimizations are possible for native devices)

Enabling Unity VR support

To enable native VR support for your game builds and the Editor, open the Player SettingsSettings that let you set various player-specific options for the final game built by Unity. More info

See in Glossary (menu: Edit > Project SettingsA broad collection of settings which allow you to configure how Physics, Audio, Networking, Graphics, Input and many other areas of your Project behave. More info

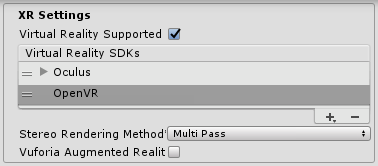

See in Glossary > Player). Select XR Settings and check the Virtual Reality Supported checkbox. Set this for each build target. Enabling virtual reality support in a standalone build doesn’t also enable the support for Android (and vice-versa).

Use the Virtual Reality SDKs list below the checkbox to add and remove VR devices for each build target. The order of the list is the order in which Unity tries to enable VR devices at runtime. The first device that initializes properly is the one enabled. This list order is the same in the built Player.

Built applications: Choosing startup device

Your built application initializes and enables devices in the same order as the Virtual Reality SDKs list in XR Settings (see Enabling Unity VR support, above). Devices not present in the list at build time are not available in the final build; the exception to this is None. Device None is equivalent to a non-VR application (that is, a normal Unity application) and can be switched to during runtime without including it in the list.

If you include None as a device in the list, the application can default to a non-VR application before it attempts to initialize a VR device. If you place None at the top of the list, the application starts with VR disabled. You can then enable and disable VR devices that are present in your list through script using XR.XRSettings.LoadDeviceByName.

If initialization of the device you attempt to switch to fails, Unity disables VR with that device still set as the active VR device. When you switch devices (XR.XRSettings.LoadDeviceByName) or enable XR (XR.XRSettings.enabled), the built application attempts to initialize again.
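The runtime switch described above could be sketched as the coroutine below. This is a minimal sketch, assuming the legacy `UnityEngine.XR.XRSettings` API. The class name `VRDeviceSwitcher` and the method `SwitchTo` are hypothetical, and the device name you pass is assumed to match an entry in your Virtual Reality SDKs list (or to be `None`).

```csharp
using System;
using System.Collections;
using UnityEngine;
using UnityEngine.XR;

// Hypothetical helper component; not part of the Unity API.
public class VRDeviceSwitcher : MonoBehaviour
{
    // Requests a device by name, waits a frame, then enables or disables VR.
    public IEnumerator SwitchTo(string deviceName)
    {
        XRSettings.LoadDeviceByName(deviceName);

        // The requested device is not loaded immediately, so wait one frame
        // before checking the result.
        yield return null;

        // "None" (or an empty name) means a normal, non-VR application.
        if (string.IsNullOrEmpty(deviceName) ||
            string.Equals(deviceName, "None", StringComparison.OrdinalIgnoreCase))
        {
            XRSettings.enabled = false;
            yield break;
        }

        if (string.Equals(XRSettings.loadedDeviceName, deviceName,
                          StringComparison.OrdinalIgnoreCase))
        {
            XRSettings.enabled = true;
        }
        else
        {
            Debug.LogWarning("Could not load VR device: " + deviceName);
        }
    }
}
```

From another script on the same GameObject, you might start it with `StartCoroutine(GetComponent<VRDeviceSwitcher>().SwitchTo("oculus"));`.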

Use the following command line argument to launch a specific device:

-vrmode DEVICETYPE

where DEVICETYPE is one of the names from the supported XR devices list.

Example: MyGame.exe -vrmode oculus

What happens when VR is enabled

When VR is enabled in Unity, a few things happen automatically:

Automatic rendering to a head-mounted display

All Cameras in your Scene can render directly to the head-mounted display (HMD). Unity automatically adjusts View and Projection matrices to account for head tracking, positional tracking and field of view.

It is possible to disable rendering to the HMD using the Camera component’s stereoTargetEye property. Alternatively, you can set the Camera to render to a Render Texture using the Target Texture property.

- Use the stereoTargetEye property to set the Camera to only render a specific eye to the HMD. This is useful for special effects such as a sniper scope or stereoscopic videos. To achieve this, add two Cameras to the Scene: one targeting the left eye, the other targeting the right eye. Set layer masks to configure what Unity sends to each eye.
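The two-Camera setup above might look like the following in script. This is a sketch only: the class name `PerEyeCameras`, the field names, and the layer names `LeftEyeOnly` and `RightEyeOnly` are hypothetical, and the layers are assumed to have been created in your project.

```csharp
using UnityEngine;

// Hypothetical helper component; not part of the Unity API.
public class PerEyeCameras : MonoBehaviour
{
    public Camera leftEyeCamera;   // assign in the Inspector
    public Camera rightEyeCamera;  // assign in the Inspector

    // Assumed layer names; create them yourself under Tags and Layers.
    public string leftOnlyLayer = "LeftEyeOnly";
    public string rightOnlyLayer = "RightEyeOnly";

    void Start()
    {
        // Each Camera renders to exactly one eye of the HMD.
        leftEyeCamera.stereoTargetEye = StereoTargetEyeMask.Left;
        rightEyeCamera.stereoTargetEye = StereoTargetEyeMask.Right;

        // Hide the other eye's layer from each Camera via its culling mask.
        HideLayer(leftEyeCamera, rightOnlyLayer);
        HideLayer(rightEyeCamera, leftOnlyLayer);
    }

    static void HideLayer(Camera cam, string layerName)
    {
        int layer = LayerMask.NameToLayer(layerName);
        if (layer < 0)
        {
            // NameToLayer returns -1 when the layer does not exist.
            Debug.LogWarning("Layer not found: " + layerName);
            return;
        }
        cam.cullingMask &= ~(1 << layer);
    }
}
```

Objects placed on `LeftEyeOnly` would then appear only in the left eye, which is one way to feed each eye its own half of a stereoscopic video.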

Automatic head-tracked input

Unity versions newer than 2019.1 no longer support automatic head tracking for head-mounted devices. Use the Tracked Pose Driver feature of the XR Legacy Input Helpers package to implement this functionality.

You can install the XR Legacy Input Helpers package using the Package Manager window.

Understand the Camera

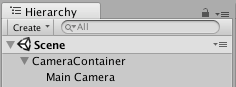

Unity overrides the Camera Transform with the head-tracked pose. To move or rotate the Camera, attach it as a child of another GameObject. All Transform changes to the parent of the Camera then affect the Camera itself. This also applies to moving or rotating the Camera using a script.

Think of the Camera’s position and orientation as where the user is looking in their neutral position.

There are differences between seated and room-scale VR experiences:

- If your device supports a room-scale experience, the Camera’s starting position is the center of the user’s play space.

- Using the seated experience, you can reset Cameras to the neutral position using XR.InputTracking.Recenter().
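As a rough illustration of the points above, the script below moves the parent GameObject instead of the Camera and recenters tracking on a key press. It is a sketch under assumptions: the class name `CameraRigMover`, the `speed` field, and the choice of the R key are hypothetical, and the script is assumed to sit on the parent of the Camera with the default `Horizontal` and `Vertical` input axes defined.

```csharp
using UnityEngine;
using UnityEngine.XR;

// Hypothetical component; attach it to the PARENT of the VR Camera.
public class CameraRigMover : MonoBehaviour
{
    public float speed = 1.5f; // metres per second, arbitrary example value

    void Update()
    {
        // Move the parent; the head-tracked Camera follows as its child.
        float x = Input.GetAxis("Horizontal");
        float z = Input.GetAxis("Vertical");
        transform.Translate(new Vector3(x, 0f, z) * speed * Time.deltaTime, Space.Self);

        // Seated experiences: reset the Camera to the neutral position.
        if (Input.GetKeyDown(KeyCode.R))
        {
            InputTracking.Recenter();
        }
    }
}
```

Writing to the Camera's own Transform would have no lasting effect, because Unity overwrites it with the tracked pose.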

Each Camera that is rendering to the device automatically replaces the FOV of the Camera with the FOV that the user input in the software settings for each VR SDK. The user cannot change the field of view during runtime, because this behaviour is known to induce motion sickness.

Editor Mode

If your VR device supports Unity Editor mode, press Play in the Editor to test directly on your device.

The left eye renders to the Game View window if you have stereoTargetEye set to left or both. The right eye renders if you have stereoTargetEye set to right.

There is no automatic side-by-side view of the left and right eyes. To see a side-by-side view in the Game View, create two Cameras, set one to the left and one to the right eye, and set the viewport of each to display them side by side.
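One possible way to arrange such a side-by-side preview from script is shown below. Treat it as a sketch: the class name `SideBySidePreview` and its fields are hypothetical, and it assumes the two Cameras already exist in the Scene.

```csharp
using UnityEngine;

// Hypothetical helper component; not part of the Unity API.
public class SideBySidePreview : MonoBehaviour
{
    public Camera leftEyeCamera;   // assign in the Inspector
    public Camera rightEyeCamera;  // assign in the Inspector

    void Start()
    {
        leftEyeCamera.stereoTargetEye = StereoTargetEyeMask.Left;
        rightEyeCamera.stereoTargetEye = StereoTargetEyeMask.Right;

        // Viewport rects use normalized coordinates (x, y, width, height).
        leftEyeCamera.rect = new Rect(0f, 0f, 0.5f, 1f);    // left half
        rightEyeCamera.rect = new Rect(0.5f, 0f, 0.5f, 1f); // right half
    }
}
```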

Note that there is overhead to running in the Editor, because the Unity Editor needs to render each window, so you might experience lag or judder. To reduce Editor rendering overhead, open the Game View and enable Maximize on Play.

The Unity Profiler is a helpful tool to get an idea of what your performance should be like when it runs outside of the Editor. However, the Profiler itself also has overhead. The best way to review game performance is to create a build on your target platform and run it directly. You can see the best performance when you run a non-development build, but development builds allow you to connect the Unity Profiler for better performance profiling.

Hardware and software recommendations for VR development in Unity

Hardware

Achieving a frame rate that matches the refresh rate of your target HMD’s display is essential for a good VR experience. If the frame rate drops below the HMD’s refresh rate, the drop is particularly noticeable and often leads to nausea for the player.

The table below lists the device refresh rates for common VR headsets:

| VR Device | Refresh Rate |
|---|---|
| Gear VR | 60 Hz |
| Oculus Rift | 90 Hz |
| Vive | 90 Hz |

Software

- Windows: Windows 7, 8, 8.1, and Windows 10 are all compatible.

- Android: Android 5.1 (Lollipop) or higher.

- macOS:

  - Oculus: macOS 10.9+ with the Oculus 0.5.0.1 runtime. However, Oculus have paused development for macOS, so use Windows for native VR functionality in Unity.

  - HTC Vive: macOS 10.13.

- Graphics card drivers: Make sure your drivers are up to date. Each VR device keeps pace with the newest drivers, so older drivers may not be supported.

Device runtime requirements

Each VR device requires that you have the appropriate runtime installed on your machine. For example, to develop and run Oculus within Unity, you need to have the Oculus runtime (also known as Oculus Home) installed on your machine. For Vive, you need to have Steam and SteamVR installed.

Depending on what version of Unity you are using, the runtime versions for each specific device that Unity supports may differ. You can find runtime versions in the release notes of each major and minor Unity release.

With some version updates, previous runtime versions are no longer supported. This means that native Unity VR support does not work with earlier runtime versions, but continues to work with new runtime versions.

Unity native VR support does not read plug-ins from within your project’s folder, so including earlier versions of the plug-in with native support fails if you have VR support enabled. If you wish to use an earlier version with a release of Unity that no longer supports that version, disable Native VR Support (go to XR Settings and uncheck Virtual Reality Supported). You can then access the plug-in like any other 3rd party plugin. See the section above on Enabling Unity VR Support for more details.

- Automatic head tracking support removed in 2019.1.
