Single-Pass Stereo Rendering for HoloLens

There are two stereo rendering methods for Windows Holographic devices (HoloLens): multi-pass and single-pass instanced.

Multi-pass

Multi-pass rendering runs two complete render passes, one for each eye. This generates almost double the CPU workload of the single-pass instanced method. However, it is the most backwards-compatible method and doesn't require any shader changes.

Single-pass Instanced

Single-pass instanced rendering performs a single render pass in which each draw call is replaced with an instanced draw call that covers both eyes. This greatly reduces CPU utilization. It also slightly reduces GPU utilization, thanks to cache coherency between the two eyes' instances of the same draw. In turn, your app's power consumption is much lower.
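Most of the CPU saving comes from halving the number of draw submissions. The following is a hypothetical Python model, not Unity code: it counts the draw calls each method would issue for a list of meshes and shows that both methods still render the same number of mesh instances. `DrawCall`, `multi_pass`, and `single_pass_instanced` are illustrative names. Only draw submissions are counted; other per-frame CPU work is not modeled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DrawCall:
    """One draw call submitted by the CPU (hypothetical model, not a Unity type)."""
    mesh: str
    eye: Optional[str]  # "left"/"right" in multi-pass; None when one call covers both eyes
    instance_count: int


def multi_pass(meshes: List[str]) -> List[DrawCall]:
    """Two complete passes: every mesh is submitted once per eye."""
    return [DrawCall(mesh, eye, 1) for eye in ("left", "right") for mesh in meshes]


def single_pass_instanced(meshes: List[str]) -> List[DrawCall]:
    """One pass: every mesh is submitted once, as an instanced draw with 2 instances."""
    return [DrawCall(mesh, None, 2) for mesh in meshes]


meshes = ["floor", "hologram", "cursor"]
for name, calls in (("multi-pass", multi_pass(meshes)),
                    ("single-pass instanced", single_pass_instanced(meshes))):
    rendered = sum(call.instance_count for call in calls)
    print(f"{name}: {len(calls)} draw calls, {rendered} mesh instances rendered")
# multi-pass: 6 draw calls, 6 mesh instances rendered
# single-pass instanced: 3 draw calls, 6 mesh instances rendered
```

The GPU still shades both eyes in either case; what changes is how many times the CPU has to set up and submit a draw.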

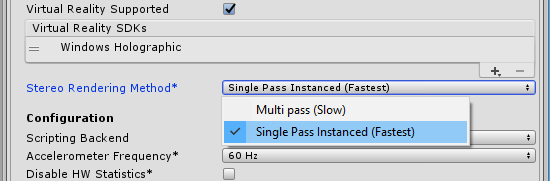

To enable this feature, open the Player settings (menu: Edit > Project Settings, then select the Player category). Navigate to the Other Settings panel, enable the Virtual Reality Supported property, and then select Single Pass Instanced (Fastest) from the Stereo Rendering Method dropdown menu.

Unity defaults to the slower Multi pass (Slow) setting because you may have custom shaders that do not yet contain the code required to support this feature.

Shader script requirements

Any shaders that are not built in must be updated to work with instancing; see GPU Instancing for how to do this. You also need to make two additional changes in the last shader stage that runs before the fragment shader (vertex, hull, domain, or geometry). First, add UNITY_VERTEX_OUTPUT_STEREO to that stage's output struct. Second, call UNITY_INITIALIZE_VERTEX_OUTPUT_STEREO() in the stage's main function, after UNITY_SETUP_INSTANCE_ID() has been called.
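To see why UNITY_INITIALIZE_VERTEX_OUTPUT_STEREO() must follow UNITY_SETUP_INSTANCE_ID(), it helps to model what the two macros do. The following Python sketch is a simplified model, not the macros' source. It assumes, based on UnityInstancing.cginc from around this release, that under single-pass instancing the hardware instance ID interleaves the eyes (eye index = ID & 1, object instance = base + (ID >> 1)), and that the stereo output field selects which slice of a render-target array (one slice per eye) the primitive is written to. The dictionary key `render_target_array_index` is a hypothetical name.

```python
from typing import Dict, Tuple


def setup_instance_id(input_instance_id: int, base_instance_id: int = 0) -> Tuple[int, int]:
    """Model of UNITY_SETUP_INSTANCE_ID with single-pass instancing enabled (assumed layout).

    Even hardware IDs are the left eye (0), odd IDs the right eye (1).
    Returns (stereo_eye_index, object_instance_id).
    """
    stereo_eye_index = input_instance_id & 1
    object_instance_id = base_instance_id + (input_instance_id >> 1)
    return stereo_eye_index, object_instance_id


def initialize_vertex_output_stereo(output: Dict[str, int], stereo_eye_index: int) -> Dict[str, int]:
    """Model of UNITY_INITIALIZE_VERTEX_OUTPUT_STEREO: record the render-target
    array slice (one per eye) that this vertex's primitive is rasterized into."""
    output["render_target_array_index"] = stereo_eye_index
    return output


# An instanced draw of 2 objects for 2 eyes produces hardware instance IDs 0..3.
for hw_id in range(4):
    eye, obj = setup_instance_id(hw_id)
    out = initialize_vertex_output_stereo({}, eye)
    print(f"hardware ID {hw_id}: eye {eye}, object instance {obj}, slice {out['render_target_array_index']}")
```

Because the eye index only exists once the instance ID has been decoded, initializing the stereo output before UNITY_SETUP_INSTANCE_ID() would copy a value that has not been set yet.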

Post-Processing Shaders

Declare each input texture with the UNITY_DECLARE_SCREENSPACE_TEXTURE(tex) macro so that it is declared as a 2D texture array when single-pass instanced rendering is enabled. Next, add a call to UNITY_SETUP_INSTANCE_ID() at the beginning of the fragment shader. Finally, use the UNITY_SAMPLE_SCREENSPACE_TEXTURE() macro when sampling those textures. See HLSLSupport.cginc for other similar macros, such as those for depth textures and screen-space shadow maps.
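The screen-space macros exist because each eye's image lives in its own slice of a texture array, so a post-processing pass must sample the slice for the eye it is currently shading. The following Python sketch models that lookup under stated assumptions: slice 0 is the left eye, slice 1 the right eye, textures are plain nested lists, and sampling is nearest-texel. `sample_point` and `sample_screenspace_texture` are hypothetical helpers, not Unity functions.

```python
from typing import List, Sequence

# Hypothetical stand-in for one texture slice: rows of single-channel texels.
Texture2D = List[List[float]]


def sample_point(tex: Texture2D, u: float, v: float) -> float:
    """Nearest-texel lookup with UVs in [0, 1]."""
    height, width = len(tex), len(tex[0])
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return tex[y][x]


def sample_screenspace_texture(tex_array: Sequence[Texture2D], u: float, v: float,
                               eye_index: int) -> float:
    """Model of UNITY_SAMPLE_SCREENSPACE_TEXTURE: the same UV is looked up
    in the array slice that belongs to the eye currently being shaded."""
    return sample_point(tex_array[eye_index], u, v)


left = [[0.1, 0.2], [0.3, 0.4]]
right = [[0.9, 0.8], [0.7, 0.6]]
screen = [left, right]
print(sample_screenspace_texture(screen, 0.75, 0.25, eye_index=0))  # 0.2
print(sample_screenspace_texture(screen, 0.75, 0.25, eye_index=1))  # 0.8
```

In this model, the fragment shader's call to UNITY_SETUP_INSTANCE_ID() is what supplies `eye_index`; without it, both eyes would read the same slice.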

Here’s a simple example that applies all of the previously mentioned changes to the template image effect:

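```hlsl
// Inside the CGPROGRAM block of the image effect's Pass
#pragma vertex vert
#pragma fragment frag
#include "UnityCG.cginc"

struct appdata
{
    float4 vertex : POSITION;
    float2 uv : TEXCOORD0;
    UNITY_INSTANCE_ID                   // instance ID input (encodes the eye)
};

struct v2f
{
    float2 uv : TEXCOORD0;
    float4 vertex : SV_POSITION;
    UNITY_INSTANCE_ID                   // passes the instance ID on to the fragment shader
    UNITY_VERTEX_OUTPUT_STEREO          // selects which eye's render target this output goes to
};

v2f vert (appdata v)
{
    v2f o;
    UNITY_SETUP_INSTANCE_ID(v);                 // must come first
    UNITY_TRANSFER_INSTANCE_ID(v, o);
    UNITY_INITIALIZE_VERTEX_OUTPUT_STEREO(o);   // only after UNITY_SETUP_INSTANCE_ID
    o.vertex = UnityObjectToClipPos(v.vertex);
    o.uv = v.uv;
    return o;
}

// Declared as a 2D texture array when single-pass instancing is enabled
UNITY_DECLARE_SCREENSPACE_TEXTURE(_MainTex);

fixed4 frag (v2f i) : SV_Target
{
    UNITY_SETUP_INSTANCE_ID(i);                 // restores the eye index in the fragment stage
    fixed4 col = UNITY_SAMPLE_SCREENSPACE_TEXTURE(_MainTex, i.uv);
    // just invert the colors
    col = 1 - col;
    return col;
}
```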

DrawProceduralIndirect

Graphics.DrawProceduralIndirect() and CommandBuffer.DrawProceduralIndirect() get all of their arguments from a compute buffer, so Unity can't easily increase the instance count for you. Therefore, you have to manually double the instance count contained in your compute buffers.
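The following Python sketch shows the doubling on the raw bytes of an argument buffer. It assumes the layout described in the Unity scripting reference for DrawProceduralIndirect: four 32-bit unsigned integers (vertex count per instance, instance count, start vertex, start instance) beginning at a byte offset `args_offset`, stored little-endian. `pack_args` and `double_instance_count_for_stereo` are hypothetical helpers; in a real project the equivalent change would go wherever you fill the buffer, such as the C# code that calls ComputeBuffer.SetData or the compute shader that writes the arguments.

```python
import struct

# Assumed layout: 4 x uint32 at args_offset (little-endian).
ARGS_FORMAT = "<4I"
INSTANCE_COUNT_INDEX = 1  # vertex count per instance, instance count, start vertex, start instance


def pack_args(vertex_count_per_instance: int, instance_count: int,
              start_vertex: int = 0, start_instance: int = 0) -> bytes:
    """Build the 16-byte argument block for one indirect draw."""
    return struct.pack(ARGS_FORMAT, vertex_count_per_instance, instance_count,
                       start_vertex, start_instance)


def double_instance_count_for_stereo(args: bytes, args_offset: int = 0) -> bytes:
    """Return a copy of the buffer with the instance count doubled, so that
    each original instance is drawn once per eye under single-pass instancing."""
    buf = bytearray(args)
    fields = list(struct.unpack_from(ARGS_FORMAT, buf, args_offset))
    fields[INSTANCE_COUNT_INDEX] *= 2
    struct.pack_into(ARGS_FORMAT, buf, args_offset, *fields)
    return bytes(buf)


args = pack_args(vertex_count_per_instance=36, instance_count=100)
print(struct.unpack(ARGS_FORMAT, double_instance_count_for_stereo(args)))  # (36, 200, 0, 0)
```

The function returns a new buffer rather than editing in place, so the same source arguments can still be used for a multi-pass build, where each eye is drawn in its own pass with the original instance count.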

See the Vertex and fragment shader examples page for more information on shader code.

- 2017-09-01 Page amended
- New feature in 5.5
- Fixed example code for single pass stereo rendering for HoloLens
